Overview

The Shark Lab Oceanic Research Platform, or SLORP, is a solar-powered shark detection buoy that aims to enable the efficient, reliable, and timely gathering of shark data, allowing researchers to learn more about these magnificent animals. SLORP regularly collects relevant telemetry from external sensors and uploads data to the cloud, where the data are analyzed and displayed on a user-friendly front end website. SLORP’s expandability and ingenuity will not only change the way researchers think about gathering data and collaborating on projects, but will help promote an accurate understanding of sharks.

Our Team

Kevin Dixson

Embedded System Developer

I am the Computer Engineering graduate who developed all of the software that runs onboard the SLORP buoy, which monitors various sensors, parses data, and uploads information to the AWS cloud.

Tegan Fleishman

Full Stack Developer

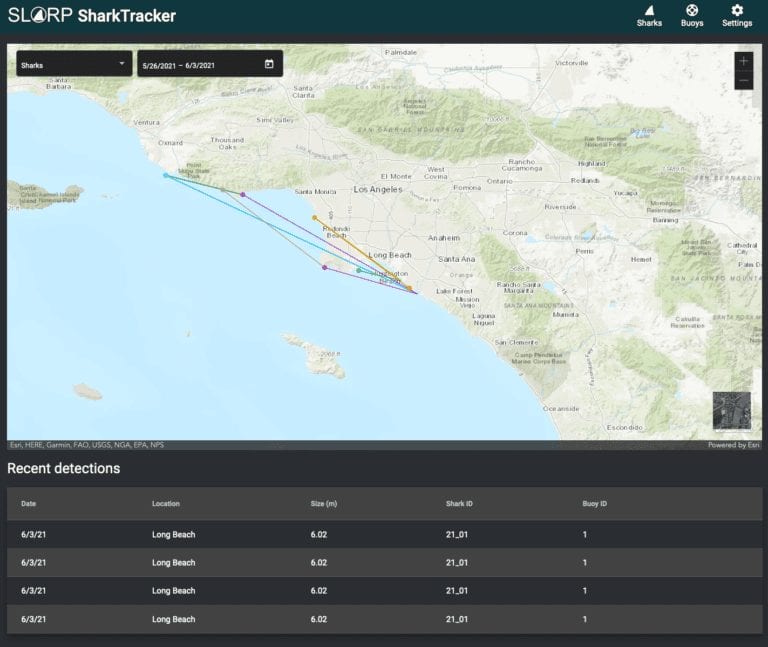

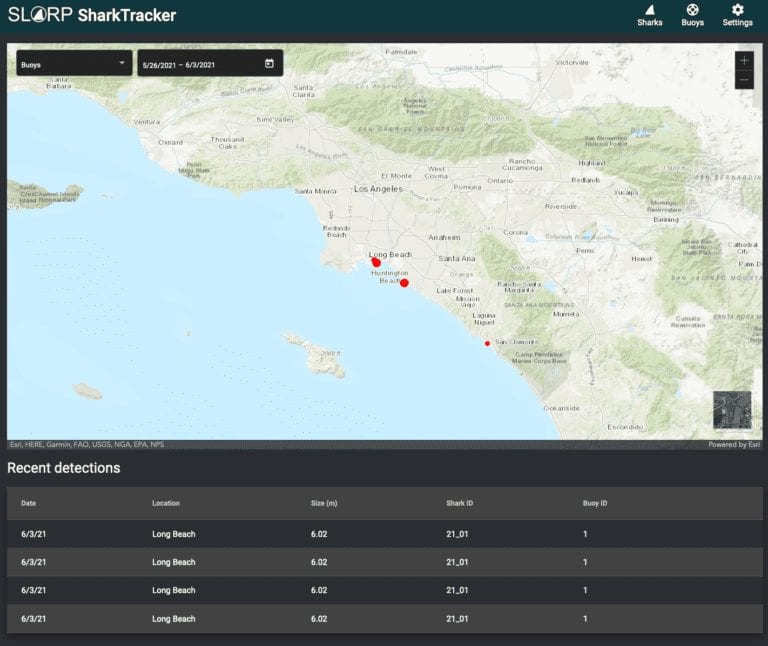

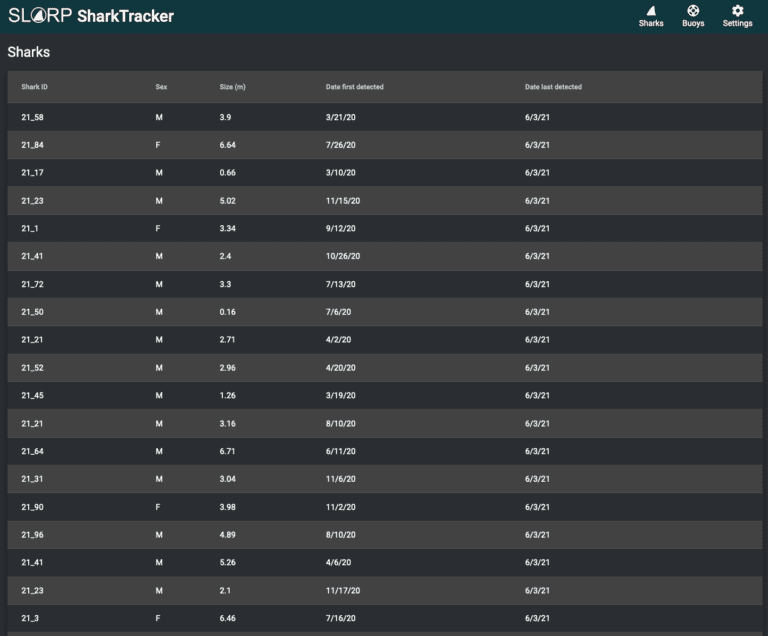

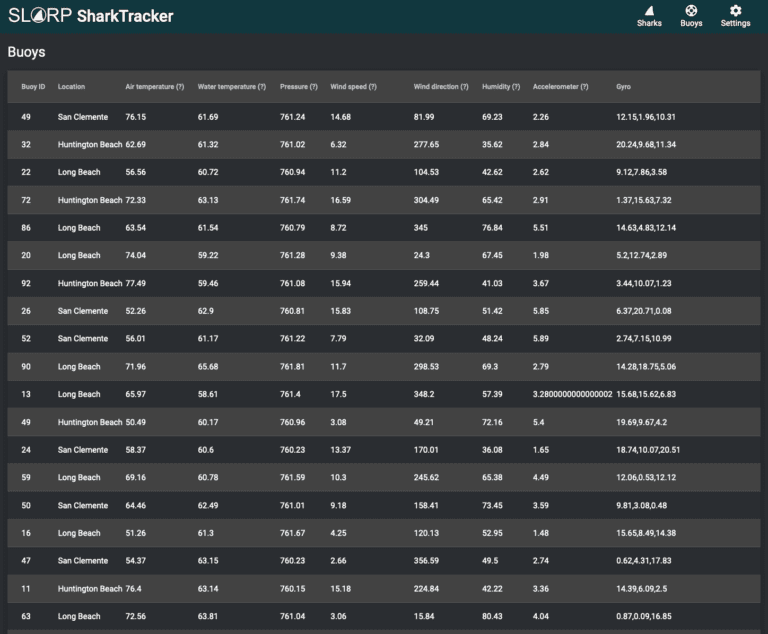

I am the Computer Science graduate who built the “Shark Tracker” website that displays data gathered from the SLORP buoy. The website shows multiple views of the data, including historical shark paths, per-buoy shark detection counts, and metadata about individual sharks and buoys.

Darnell Gadberry

Shark Lab Systems Architect

I am the architect and lead developer of the CSULB Shark Lab Oceanic Research Platform (SLORP). I manage development teams, create specifications, and write technical documentation for the project.

Acknowledgements

Dr. Bridget Benson and Dr. Lubomir Stanchev from Cal Poly advised this project for Kevin Dixson and Tegan Fleishman, respectively. Darnell Gadberry provided significant input, guidance, and direction throughout the entirety of the project, and we are extremely grateful for his help.

Poster

SLORP Buoy Overview

Onboard the buoy, there are two sensors, one main (primary) computer, one peripheral (secondary) computer, and three solar panels connected to one battery power source. The sensors gather atmospheric and oceanic telemetry and send it to the main computer. The main computer receives these messages, parses them, and repackages them before sending them to AWS. Once the messages arrive in AWS, they are stored in a database, which the Shark Tracker website queries, parses, and displays in the format requested by the end user.

Buoy Hardware

Primary computer

The primary computer is a Raspberry Pi 4B that collects data from the two sensors: a subsurface acoustic receiver and an atmospheric weather station. The acoustic receiver reads the water temperature, listens for tagged sharks passing nearby, and sends its data to the Raspberry Pi 4B. The weather station collects a wide variety of data, including air temperature, GPS coordinates, and acceleration. These data are regularly delivered to the Raspberry Pi 4B, which packages them and sends them to the cloud.
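As a rough illustration of the "parse, then package" step, here is a minimal Python sketch. The comma-separated input format, the field names, and the helper names `parse_detection` and `package` are all assumptions made for this example; the actual sensor message formats are not described on this page.

```python
import json
from datetime import datetime, timezone


def parse_detection(line: str) -> dict:
    """Parse a hypothetical receiver line of the form 'tag_id,water_temp_c'."""
    tag_id, water_temp = line.strip().split(",")
    return {"tag_id": tag_id, "water_temp_c": float(water_temp)}


def package(buoy_id: str, reading: dict, when: datetime) -> str:
    """Wrap a parsed reading in a JSON envelope (assumed layout) for upload."""
    envelope = {
        "buoy_id": buoy_id,
        "timestamp": when.astimezone(timezone.utc).isoformat(),
        "reading": reading,
    }
    return json.dumps(envelope, sort_keys=True)


if __name__ == "__main__":
    reading = parse_detection("TAG-0001,17.5\n")
    print(package("slorp-01", reading, datetime.now(timezone.utc)))
```

Keeping parsing and packaging as separate functions means each sensor can have its own parser while sharing one envelope format.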

Secondary computer

The secondary computer is a Raspberry Pi Zero W. This computer’s job is to act as a gateway to the internet for the primary computer. Since the primary computer is inside a metal enclosure, radio frequencies can’t penetrate the main buoy well. Therefore, the secondary computer sits inside a hermetically sealed plastic enclosure outside the main buoy well, where radio signals, such as LTE, can reach the outside world. The secondary computer connects over USB to the main computer and is configured as a USB Ethernet gadget. This means that the main computer sees the secondary one as a device on a local subnet. All permissible traffic coming from the main computer gets routed through an LTE modem attached to the secondary computer, and the data goes to its final destination on the internet.

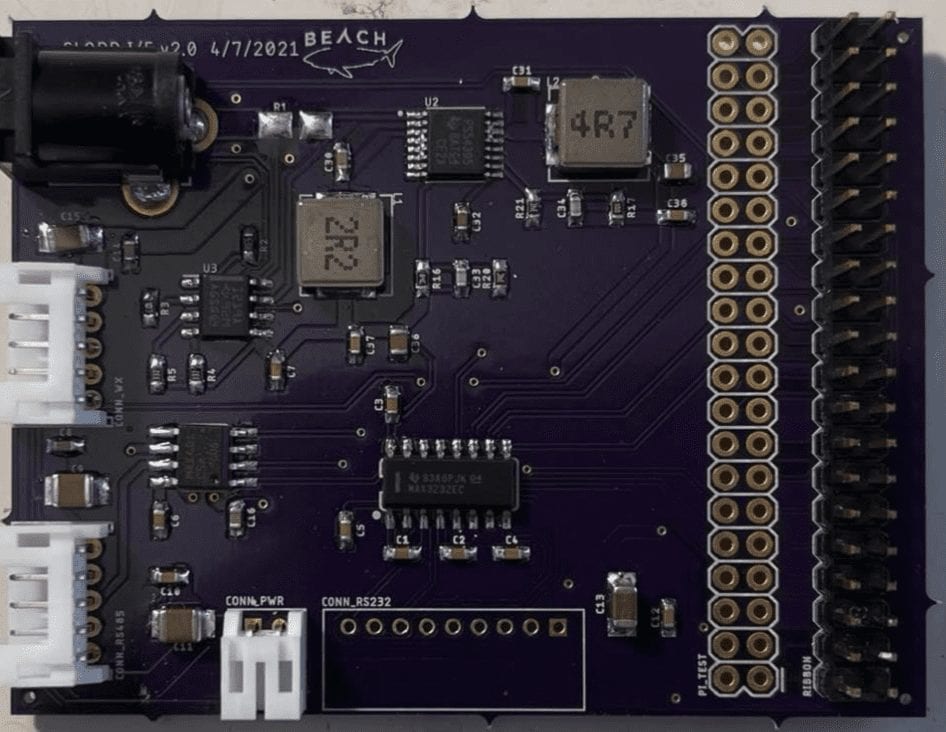

Custom Hardware

The central hardware challenge of the SLORP project was the sensors. Both the weather station and the acoustic receiver communicate over serial interfaces that are not directly compatible with a Raspberry Pi. The weather station uses RS-232 and the acoustic receiver uses RS-485. The custom PCB we designed takes the solar panels’ output voltage and converts it to 5V and 3.3V in order to power both computers and the sensors. The board also performs the voltage level conversions between each sensor and the main computer.
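To show why level conversion is needed, the sketch below models the standard RS-232 signalling levels in Python: a line voltage between -3 V and -15 V is a "mark" (logic 1), between +3 V and +15 V is a "space" (logic 0), and anything in between is undefined. A Raspberry Pi's GPIO pins use 3.3 V logic instead. The function names are hypothetical, and this is only a model of the concept, not a description of the circuit on the custom PCB.

```python
from typing import Optional


def rs232_to_logic(volts: float) -> Optional[int]:
    """Map an RS-232 line voltage to a logic bit, or None if undefined."""
    if -15.0 <= volts <= -3.0:
        return 1  # mark: negative voltage means logic 1
    if 3.0 <= volts <= 15.0:
        return 0  # space: positive voltage means logic 0
    return None  # transition region, not a valid level


def logic_to_pi_volts(bit: int) -> float:
    """Map a logic bit to the nominal 3.3 V GPIO level."""
    return 3.3 if bit else 0.0


if __name__ == "__main__":
    for v in (-9.0, 9.0, 0.5):
        print(v, rs232_to_logic(v))
```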

CSU Long Beach Shark Lab

Thank you to the CSULB Shark Lab for the incredible support and mentorship throughout this project.

Amazon Web Services

Amazon Web Services (AWS) is a cloud platform that offers a wide range of services. From managing our fleet of buoys to delivering data for our website, AWS was a central part of our design. Not only did it allow us to spin up resources with just a few clicks, but it also gave us access to state-of-the-art hardware to run fast, scalable computations.

AWS Greengrass

AWS Greengrass is similar to a “hypervisor”-style OS that runs all of our code onboard the buoy. The vast majority of the code running on the main computer is managed by Greengrass. For every general task, such as publishing shark detection events, there is a function that the Greengrass manager invokes when a message arrives from the acoustic receiver. Once the function completes, it goes to sleep and saves power while idling.

The benefits of Greengrass are far-reaching, but the most powerful ones are over-the-air updates, secure SSH tunnels, and easy connectivity to the AWS cloud. Specifically, when a function runs on a sensor event, we can see when it executed, whether it succeeded, and what happened to the data once it completed. If the component did not succeed, then we can easily fix a bug and deploy a new version of the code in a few minutes without having to physically interact with the buoy, or even disturb other components that may be running in the background.
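The event-driven pattern described above, where a function runs only when a sensor message arrives, can be sketched in plain Python. The `Dispatcher` class and the source names below are hypothetical stand-ins for illustration; they do not use or represent the Greengrass API.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class Dispatcher:
    """Invoke registered handlers when a message arrives from a given source."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = {}

    def on(self, source: str, handler: Handler) -> None:
        """Register a handler for messages from one source."""
        self._handlers.setdefault(source, []).append(handler)

    def deliver(self, source: str, message: dict) -> int:
        """Run every handler for this source; return how many were invoked."""
        handlers = self._handlers.get(source, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


if __name__ == "__main__":
    dispatcher = Dispatcher()
    dispatcher.on("acoustic_receiver", lambda msg: print("detection:", msg))
    dispatcher.deliver("acoustic_receiver", {"tag_id": "TAG-0001"})
```

Between deliveries no handler code runs, which mirrors the idle behavior described above.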

AWS IoT Core

AWS IoT Core was leveraged to connect our IoT devices (buoys) to the AWS cloud. The service allows for two-way communication between the buoys and our server. When sensor data comes into the main computer, it gets parsed and then uploaded to the cloud via IoT Core. These messages are then available on the cloud for any of our services to read.
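AWS IoT Core exchanges messages over MQTT, where each message is published to a slash-separated topic. The sketch below shows one way an upload step might build a topic and payload. The topic layout `slorp/<buoy_id>/<kind>` and the function names are assumptions for illustration, and the `publish` argument is an injected callable standing in for whichever AWS SDK client the buoy actually uses.

```python
import json
from typing import Callable

Publish = Callable[[str, bytes], None]


def topic_for(buoy_id: str, kind: str) -> str:
    """Build a hypothetical MQTT topic such as 'slorp/slorp-01/detections'."""
    return f"slorp/{buoy_id}/{kind}"


def upload(publish: Publish, buoy_id: str, kind: str, reading: dict) -> str:
    """Serialize a reading to JSON and hand it to the publish callable."""
    topic = topic_for(buoy_id, kind)
    payload = json.dumps(reading, sort_keys=True).encode("utf-8")
    publish(topic, payload)
    return topic


if __name__ == "__main__":
    # Stand-in publisher that prints instead of sending over the network.
    upload(lambda t, p: print(t, p), "slorp-01", "detections", {"tag_id": "TAG-0001"})
```

Passing the publisher in as an argument keeps the packaging logic testable on a laptop without a cloud connection.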

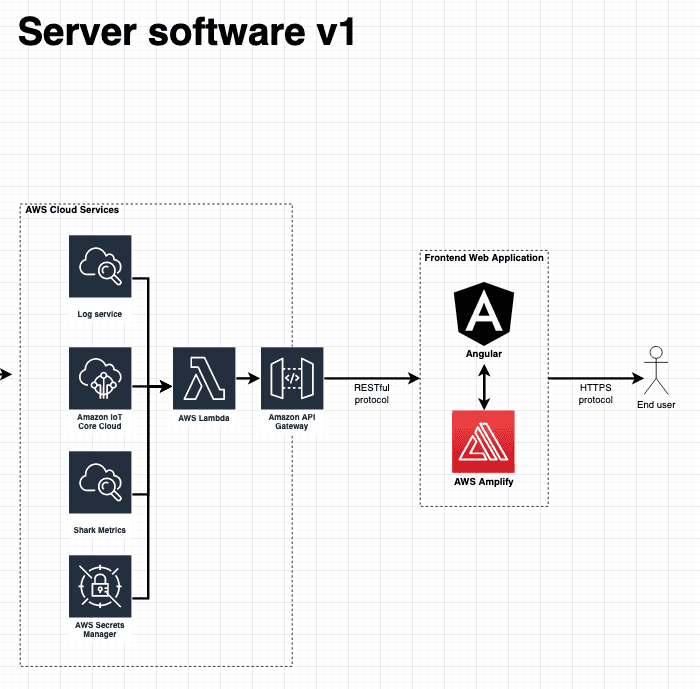

AWS Lambda

AWS Lambda is a “serverless compute service that lets you run code without provisioning or managing servers,” and it was instrumental in helping us scale our back-end very quickly. By using Lambda functions instead of our own hardware, we were able to perform large computations on our data at very high speeds, all over the cloud.

AWS API Gateway

AWS API Gateway acts “as the front door for applications to access data, business logic, or functionality from your backend services.” We used this service to handle all data retrieval calls from our website, which in turn calls AWS Lambda and other AWS services to grab the data we need.
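A minimal Python sketch of a Lambda function behind API Gateway is shown below, assuming the Lambda proxy integration (query parameters arrive in `event["queryStringParameters"]`, and the function returns a `statusCode`, `headers`, and a string `body`). The in-memory `DETECTIONS` list, the `buoy_id` parameter, and the per-buoy count endpoint are hypothetical; the real service would query the project database instead.

```python
import json

# Hypothetical sample data standing in for a database query.
DETECTIONS = [
    {"buoy_id": "slorp-01", "tag_id": "T1"},
    {"buoy_id": "slorp-01", "tag_id": "T2"},
    {"buoy_id": "slorp-02", "tag_id": "T1"},
]


def count_by_buoy(detections):
    """Count detections per buoy."""
    counts = {}
    for detection in detections:
        buoy_id = detection["buoy_id"]
        counts[buoy_id] = counts.get(buoy_id, 0) + 1
    return counts


def handler(event, context):
    """Return detection counts, optionally filtered by ?buoy_id=..."""
    params = event.get("queryStringParameters") or {}
    counts = count_by_buoy(DETECTIONS)
    buoy_id = params.get("buoy_id")
    if buoy_id is not None:
        counts = {buoy_id: counts.get(buoy_id, 0)}
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(counts),
    }
```

The website would then call the API Gateway URL and parse the JSON body to render a view such as per-buoy detection counts.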

Shark Tracker Website

Website Software Overview

The Shark Tracker website was built using multiple technologies and frameworks. We experimented with several options for each feature of the website, and the technologies below balance the best tools available against those our team already had expertise with.

Angular was used as the Single Page Application (SPA) framework that runs the front-end portion of the website.

AWS was used as the back-end service for all data retrieval, website hosting, and authentication layers.

ArcGIS is a powerful industry-standard mapping tool, and it’s what we used for the website’s map features.

Website Software Diagram